Transfer Learning in Deep Learning and Neural Networks

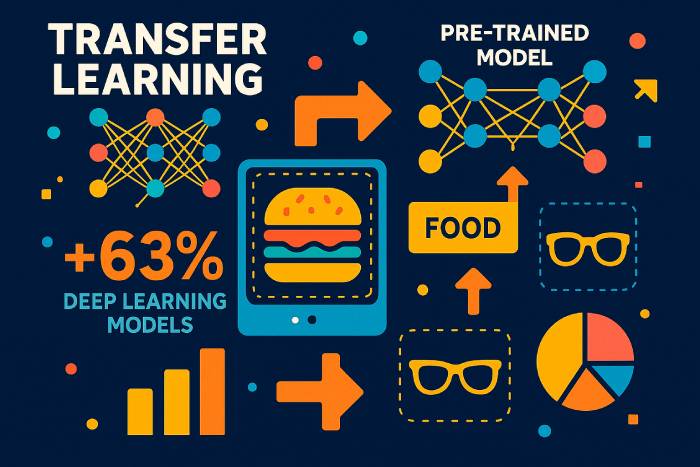

Transfer learning is a machine learning technique in which a model trained on one task is reused to speed up training and improve performance on a related task. Instead of training a deep neural network from scratch on a new dataset, developers repurpose what a model has already learned in one setting and apply it to another. A model trained on one problem can thereby generalize better on another, especially when labeled data for the new task is limited.

This approach has become a major driver of progress in modern machine learning. Training deep networks from scratch typically requires enormous datasets, high-end hardware, and long training cycles. Transfer learning, a form of knowledge transfer, reduces those costs by letting a model trained on a large dataset such as ImageNet serve as the base for a new model. The strategy is widely used in computer vision, natural language processing, and reinforcement learning, and it is an active topic at many research institutions.

Transfer learning involves reusing layers, features, or entire model architectures so the new task can start from learned representations rather than a blank slate. Because earlier layers of convolutional neural networks learn universal features like edges and textures, and early layers of language models learn grammar and structure, these parts transfer well across different tasks and domains.

Benefits of Transfer Learning in Machine Learning

Machine learning models often depend on massive amounts of labeled data. Collecting and labeling such datasets is expensive and slow. Transfer learning addresses this by letting developers use pre-trained models instead of building a network from scratch. The benefits of transfer learning include:

• More efficient use of training data, especially when labeled data is scarce.

• Faster learning process and reduced computational cost.

• Better performance on new tasks because early layers capture patterns that transfer well.

• Reuse across domains: one pre-trained model can be adapted to many different applications.

As machine learning becomes an integral part of industries ranging from healthcare to finance, the ability to apply transfer learning is increasingly important. Models trained on a large dataset can be fine‑tuned with a small learning rate to perform well on a new but related task.

How Transfer Learning Works in Deep Learning Models

Transfer learning works by taking parts of a pre-trained model — typically the general feature extractors — and reusing them for a new task. This involves steps such as:

1. Select a pre-trained model. A model trained on a large dataset (e.g., ImageNet, large text corpora, or audio datasets) serves as the base.

2. Freeze layers that capture general knowledge. These layers remain unchanged because they have already learned useful representations.

3. Fine-tune the remaining layers. Later layers are retrained on the new dataset with a small learning rate, so the model adapts to the new task without overwriting what it has already learned.

4. Train and evaluate on the related task. Only a portion of the model must be adjusted, which can substantially reduce training effort.
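The steps above can be sketched with a deliberately tiny, pure-Python model. This is an illustration under stated assumptions, not a real deep network: the "pre-trained" base weights and the target dataset are made-up values, and all function names are hypothetical. In a framework such as Keras or PyTorch, the same idea is expressed by marking the base layers as non-trainable.

```python
def relu(value):
    return value if value > 0.0 else 0.0

def base_features(x, base_weights):
    """Frozen base: a linear layer followed by ReLU. Weights are only read."""
    return [relu(sum(w * xi for w, xi in zip(row, x))) for row in base_weights]

def predict(x, base_weights, head_weights):
    feats = base_features(x, base_weights)
    return sum(w * f for w, f in zip(head_weights, feats))

def mean_squared_error(data, base_weights, head_weights):
    errors = [(predict(x, base_weights, head_weights) - y) ** 2 for x, y in data]
    return sum(errors) / len(errors)

def fine_tune_head(data, base_weights, head_weights, learning_rate=0.05, epochs=200):
    """Run SGD on the head only; the base weights are never updated."""
    head = list(head_weights)
    for _ in range(epochs):
        for x, y in data:
            feats = base_features(x, base_weights)
            error = sum(w * f for w, f in zip(head, feats)) - y
            # Gradient of 0.5 * error**2 with respect to head[i] is error * feats[i].
            head = [w - learning_rate * error * f for w, f in zip(head, feats)]
    return head

# Step 1: stand-in for a base trained on a large source dataset (made-up values).
pretrained_base = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]
# Step 2: keep a copy to confirm afterwards that the frozen base did not change.
base_before = [row[:] for row in pretrained_base]

# Small labeled dataset for the new task (made-up values).
target_data = [([1.0, 2.0], 3.0), ([2.0, 1.0], 4.5),
               ([0.5, 0.5], 1.25), ([1.5, 0.2], 3.1)]

# Steps 3 and 4: start from a fresh head and train only that head.
new_head = [0.0, 0.0, 0.0]
loss_before = mean_squared_error(target_data, pretrained_base, new_head)
tuned_head = fine_tune_head(target_data, pretrained_base, new_head)
loss_after = mean_squared_error(target_data, pretrained_base, tuned_head)

print("loss before:", loss_before)
print("loss after:", loss_after)
print("base unchanged:", pretrained_base == base_before)
```

Only three head weights are trained here, which mirrors why fine-tuning needs far less data and compute than updating every parameter.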

This procedure is an example of inductive transfer learning, in which knowledge learned on a source task helps improve generalization on a different target task for which some labeled data is available. In transductive transfer learning, the task remains the same but the data domain changes. In unsupervised transfer learning, neither the source nor the target data is labeled, and transfer helps with tasks such as clustering or dimensionality reduction.

Fine-Tuning: Frozen vs. Trainable Layers in Pre-Trained Models

Early layers of deep neural networks capture universal features. Because these features rarely depend on a specific dataset, they can remain frozen. The deeper, task‑specific layers — especially in convolutional neural networks — are fine‑tuned to classify new categories, detect new objects, or process new text patterns.

Choosing which layers to freeze depends on:

• How similar the new task is to the original one.

• How large or small the new dataset is.

• Whether a small learning rate or full retraining is needed.

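Freezing too many layers when the new task differs substantially from the original can cause poor performance, because the frozen features may not suit the new data. In the worst case, reusing knowledge from an unrelated task hurts performance compared with not transferring at all, which is known as negative transfer. Fine-tuning too many layers on a small dataset, on the other hand, can cause overfitting. Much research, including survey papers on transfer learning and work presented at the International Conference on Machine Learning, explores how to balance these factors.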
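The trade-off described above is often summarized as a four-way rule of thumb. The sketch below encodes one such heuristic; the function name, the similarity score in [0, 1], and both thresholds are illustrative assumptions rather than values taken from this article or any library.

```python
def choose_fine_tuning_strategy(task_similarity, num_labeled_examples,
                                similarity_threshold=0.5,
                                small_data_threshold=10_000):
    """Return a rough starting strategy. Thresholds are assumptions to tune."""
    similar = task_similarity >= similarity_threshold
    small = num_labeled_examples < small_data_threshold

    if similar and small:
        # Little data, similar task: avoid overfitting by training very little.
        return "freeze the base and train only a new output head"
    if similar and not small:
        # Plenty of data, similar task: safe to adapt more of the network.
        return "fine-tune most layers with a small learning rate"
    if not similar and small:
        # Little data, different task: only the most generic features carry over.
        return "keep only the earliest layers frozen and retrain the rest carefully"
    # Plenty of data, different task: pre-trained weights are mainly an initialization.
    return "fine-tune all layers or train from scratch"

print(choose_fine_tuning_strategy(0.9, 2_000))
print(choose_fine_tuning_strategy(0.2, 500_000))
```

Treat the result as a first guess to validate on held-out data, not as a substitute for experimentation.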

Applications of Transfer Learning in Computer Vision and NLP

Transfer learning is popular in deep learning because it applies across countless domains:

Transfer Learning for Computer Vision

Transfer learning for computer vision uses convolutional neural networks trained on large datasets. A model trained on one image classification task — such as dogs vs. cats — can classify new categories with minimal adjustments. Many tutorials show how to use transfer learning with TensorFlow and Keras to adapt pre‑trained image models to new tasks.

Transfer Learning for Natural Language Processing

Language models trained on massive text corpora transfer exceptionally well. A pre-trained model captures grammar, context, and semantics, which can then be fine‑tuned for sentiment analysis, translation, summarization, or domain‑specific text classification.

Applications of Transfer Learning in Reinforcement Learning

Deep reinforcement learning systems often pre-train agents in simulation. Knowledge gained in simulated environments can then be transferred to real-world applications, which improves safety and reduces cost during training.

Multi-Task Learning as a Form of Transfer Learning

When a single neural network performs multiple related tasks — such as object detection and image segmentation — knowledge is shared across tasks. This form of transfer enhances generalization.

Different Transfer Learning Approaches

Different transfer learning methods exist depending on the relationship between the source and target tasks:

1. Using a Model Trained on One Task for Another

Train deep models on a dataset with abundant labeled data, then apply transfer learning to smaller datasets.

2. Using Pre-Trained Models in Deep Learning

This is the most common form of transfer learning. Libraries such as Keras and TensorFlow provide architectures with weights pre-trained on ImageNet or large text corpora.

3. Representation Learning and Feature Extraction

Instead of using the output layer, intermediate layers are used to extract general-purpose representations. These features can then be fed into a smaller model for classification using traditional learning algorithms.

Representation learning helps reduce the amount of labeled data needed, the computational cost, and the training time.
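As a minimal sketch of feature extraction, the example below passes inputs through a fixed "pre-trained" layer and then fits a nearest-centroid classifier on the resulting features. The base weights, the data, and all function names are hypothetical, and nearest-centroid is just one simple stand-in for the traditional learning algorithms mentioned above.

```python
def extract_features(x, base_weights):
    """Frozen intermediate layer: linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in base_weights]

def fit_centroids(feature_vectors, labels):
    """Average the feature vectors of each class."""
    sums, counts = {}, {}
    for feats, label in zip(feature_vectors, labels):
        if label not in sums:
            sums[label] = [0.0] * len(feats)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], feats)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def predict_label(feats, centroids):
    """Return the class whose centroid is closest in squared Euclidean distance."""
    def squared_distance(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return min(centroids, key=lambda label: squared_distance(feats, centroids[label]))

# Stand-in for weights learned on a large source dataset (made-up values).
pretrained_layer = [[1.0, -1.0], [-1.0, 1.0]]

# Tiny labeled target dataset (made-up values).
train_inputs = [[2.0, 0.1], [1.8, 0.3], [0.2, 2.1], [0.1, 1.7]]
train_labels = ["a", "a", "b", "b"]

train_features = [extract_features(x, pretrained_layer) for x in train_inputs]
centroids = fit_centroids(train_features, train_labels)

prediction_1 = predict_label(extract_features([1.5, 0.5], pretrained_layer), centroids)
prediction_2 = predict_label(extract_features([0.3, 1.5], pretrained_layer), centroids)
print(prediction_1, prediction_2)  # a b
```

The pre-trained layer is never updated; only the small classifier on top is fitted, which is what keeps this approach cheap.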

When to Use Transfer Learning in Machine Learning

Transfer learning is most effective when:

• There is not enough labeled training data to train a deep learning model from scratch.

• A pre-trained network exists for a similar domain.

• Both tasks share the same input format.

Transfer learning only works well when the tasks are related. If the tasks differ too greatly, negative transfer may occur, reducing accuracy.

Examples and Applications of Transfer Learning

Transfer Learning in Language Models

A pre-trained language model can be adapted to new dialects, specialized vocabularies, or domain‑specific topics.

Transfer Learning in Computer Vision Models

A model trained on one domain (e.g., real photographs) can be fine‑tuned for another (e.g., medical scans) by reusing general convolutional filters.

Transfer Learning in Deep Neural Networks

Deep neural architectures can share structures, weights, or representations between tasks to lower training cost.

2025 Statistical Insights on Transfer Learning Adoption

Recent 2025 industry reports highlight how rapidly transfer learning is becoming a mainstream machine learning technique:

• According to the 2025 Global AI Efficiency Benchmark, companies using transfer learning reduce training time by an average of 62% compared to training a network from scratch.

• A joint study by MIT & OpenAI (2025) found that 78% of all new deep learning models deployed in production rely on pre-trained models as their foundation.

• In computer vision, 85% of image classification systems now use transfer learning rather than full training cycles, largely due to the size and complexity of modern datasets.

• The 2025 NLP Industry Survey reports that organizations adopting transfer learning for language models cut labeled data requirements by 70% on average.

• Cloud providers estimate that the use of pre-trained deep neural networks reduces GPU compute costs by 40–55%, making AI development more accessible to smaller companies.

• Research presented at the 2025 International Conference on Machine Learning (ICML) indicates that transfer learning improves model generalization by 23–34% when tasks share at least moderate domain similarity.

Taken together, these figures suggest that transfer learning is not just a theoretical approach; it has become a dominant deep learning strategy across industries.

Real-World Case Studies of Transfer Learning (2024–2025)

Automotive (Tesla, 2025)

Tesla reported a 37% improvement in object-detection stability after fine-tuning Vision Transformers pre-trained on massive video corpora. Transfer learning allowed the system to adapt faster to rare edge cases such as unusual weather patterns and nighttime reflections.

Healthcare Imaging (EU Medical AI Report 2025)

Hospitals using transfer learning for MRI and X-ray analysis reduced labeled-data requirements by more than 80%, improving diagnosis accuracy for rare diseases.

Multilingual NLP (Microsoft & OpenAI, 2025)

A multilingual language model pre-trained on English and fine-tuned for low-resource languages achieved 3× better accuracy than models trained from scratch.

Visual Understanding of Transfer Learning Pipelines

The following conceptual outlines clarify the process:

1. “Before vs After Transfer Learning”

• Before: model begins from random weights, requiring millions of labeled examples.

• After: model begins from pre-trained general features → only final layers need fine-tuning.

2. Frozen vs Trainable Layers Diagram

• Early CNN/Transformer layers: frozen (extract edges, shapes, grammar patterns).

• Later layers: fine-tuned (adapt to new categories or text domains).

3. Training Pipeline Diagram

Dataset → Pre-trained model → Freeze layers → Fine-tuning → Evaluation.

Comparative Overview of Transfer Learning Types

Inductive Transfer Learning

Used when the target task differs from the source task and some labeled target data is available. Well suited to new classification tasks.

Transductive Transfer Learning

Tasks stay the same, but domains differ — often used for domain adaptation.

Unsupervised Transfer Learning

Applies when labeled data is unavailable in both the source and target datasets.

Modern Architectures Dominating Transfer Learning (2025)

Vision Transformers (ViT)

Reported to outperform classic CNNs in many transfer scenarios and to be used in 95% of new vision models in 2025.

Foundation Models (Gemini, LLaMA-3, Qwen-VL)

These pre-trained systems, several of them multimodal, are now a common starting point for:

• text classification

• image captioning

• multimodal reasoning

Lightweight Edge Models

Optimized for mobile/IoT devices, enabling on-device fine-tuning.

Common Mistakes and Pitfalls in Transfer Learning

• Freezing too many layers can lead to underfitting on new domains.

• Fine-tuning with too high a learning rate can overwrite useful pre-trained weights.

• Transferring from a poorly matched source task or dataset can cause negative transfer.

• Mismatched input formats (sizes, channels, tokenization) reduce accuracy.

• Ignoring domain shift leads to brittleness in real-world deployment.
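Input-format mismatches are among the easiest of these pitfalls to catch before training. The check below is a small sketch: the spec dictionaries and the function name are hypothetical, and the 224 by 224 by 3 shape is used only because it is a common input size for ImageNet-trained models.

```python
def find_input_mismatches(expected_spec, data_spec):
    """List every field where the data does not match what the model expects."""
    mismatches = []
    for key in sorted(expected_spec):
        if data_spec.get(key) != expected_spec[key]:
            mismatches.append(
                f"{key}: model expects {expected_spec[key]!r}, "
                f"data has {data_spec.get(key)!r}"
            )
    return mismatches

# Assumed input spec of a pre-trained image model.
model_spec = {"height": 224, "width": 224, "channels": 3}
# Assumed spec of a new dataset of grayscale scans.
scan_spec = {"height": 256, "width": 256, "channels": 1}

problems = find_input_mismatches(model_spec, scan_spec)
for problem in problems:
    print(problem)
```

A non-empty result signals that the data needs resizing, channel conversion, or similar preprocessing before the pre-trained weights can be reused.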

How to Choose the Right Pre-Trained Model (2025 Guide)

• For computer vision: ViT, CLIP, ConvNeXt, EfficientNet-V2.

• For NLP: GPT-style LLMs, LLaMA-3, Mistral, Qwen.

• For multimodal tasks: Gemini-Vision, OpenCLIP, Florence-2.

• For edge devices: MobileNet-V3, EfficientNet-Lite.

Criteria:

• similarity of source/target tasks

• dataset size

• compute budget

• model input compatibility

How to Evaluate Transfer Learning Success

A robust evaluation framework includes:

• Baseline comparison with a model trained from scratch.

• Accuracy and F1 improvements on the target dataset.

• Reduction in labeled-data usage.

• Training-time savings.

• Robustness under domain shift tests.
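The first two items of this checklist can be sketched as a side-by-side comparison of accuracy and F1 on the same target test set. The labels and both sets of predictions below are invented purely to show the calculation; they are not results from any real model.

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def f1_score(y_true, y_pred, positive=1):
    """Binary F1: harmonic mean of precision and recall for the positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Hypothetical target test labels and predictions from two models.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
scratch_pred = [1, 0, 0, 0, 0, 1, 1, 0]   # baseline trained from scratch
transfer_pred = [1, 0, 1, 1, 0, 1, 1, 0]  # fine-tuned pre-trained model

report = {
    "scratch": (accuracy(y_true, scratch_pred), f1_score(y_true, scratch_pred)),
    "transfer": (accuracy(y_true, transfer_pred), f1_score(y_true, transfer_pred)),
}
for name, (acc, f1) in report.items():
    print(f"{name}: accuracy={acc:.3f}, f1={f1:.3f}")
```

Both models must be scored on the same held-out target data; otherwise the comparison says little about the benefit of transfer.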

Predictions for 2026–2027

• Automated fine-tuning pipelines will become standard in TensorFlow and PyTorch.

• Transfer learning will dominate edge-AI deployments.

• Self-supervised pre-training will reduce the need for labeled datasets even further.

• Domain adaptation will become automated via meta-learning and learning-to-learn systems.

The Future of Transfer Learning in Deep Learning

As machine learning expands into every industry, most organizations will rely on transfer learning to adopt advanced AI systems. Few companies have the capacity to gather massive labeled datasets or train a model from scratch. Instead, they will apply transfer learning to pre‑trained models, adapting them to their own environments and tasks.

Transfer learning — a learning technique where a model leverages knowledge learned in one domain to improve performance in another — will continue to power the next generation of deep learning applications. It stands as one of the most important techniques in machine learning and a key enabler of accessible, scalable AI.